The choice of transformation functions

There are many ways to transform the output of a set of sensor sensitivities like ours into that of another system, such as CIE XYZ. The most common transform consists of finding a 3x3 matrix M that does the trick:

$$
\begin{pmatrix} X \\ Y \\ Z \end{pmatrix} = M \begin{pmatrix} R \\ G \\ B \end{pmatrix}
$$

Where X, Y, Z are the tristimulus values of the CIE 1931 colour system and R, G, B are the values (quantal catches) obtained from our camera model. The 3x3 conversion matrix M is relatively easy to obtain:

M = CIEXYZ' * pinv(Sensors');   % least-squares M such that M * Sensors' approximates CIEXYZ'

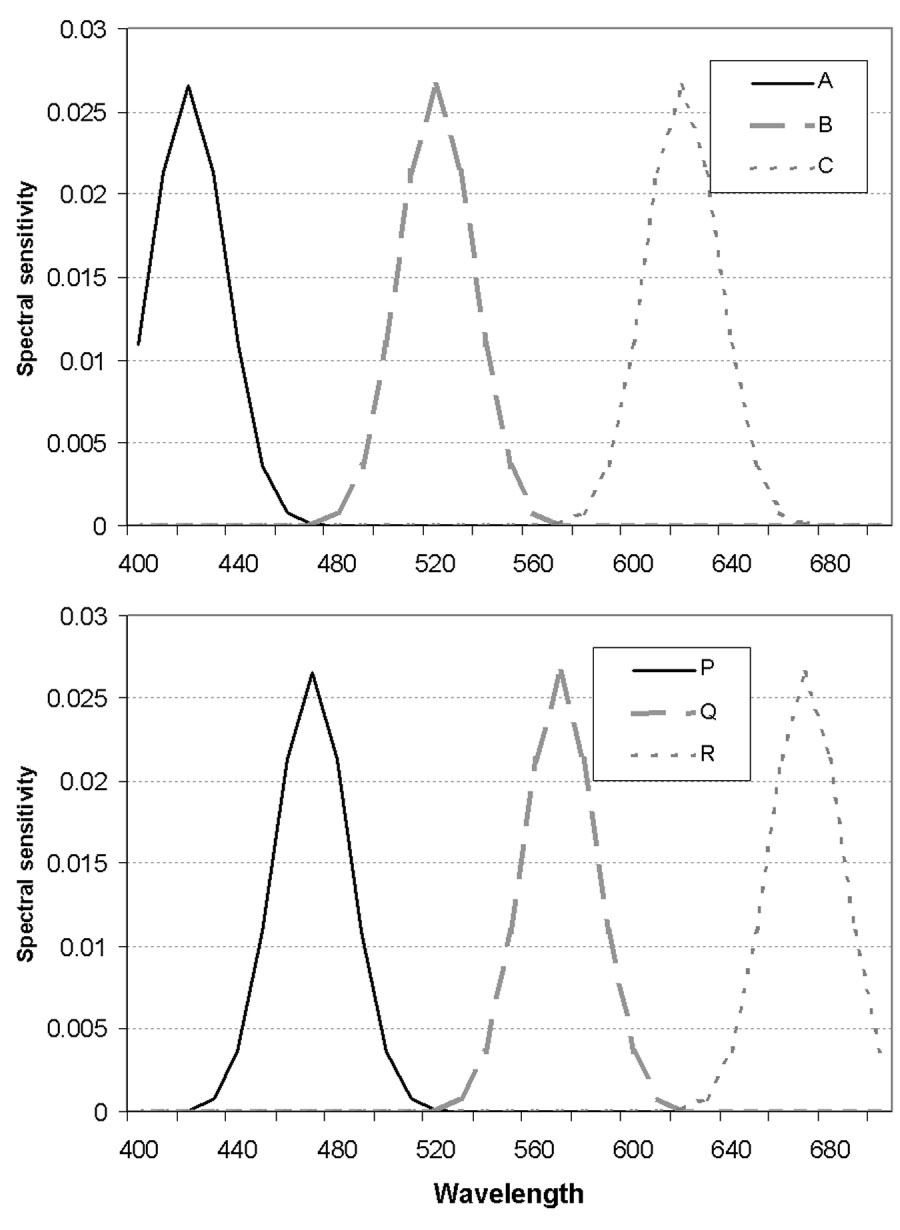

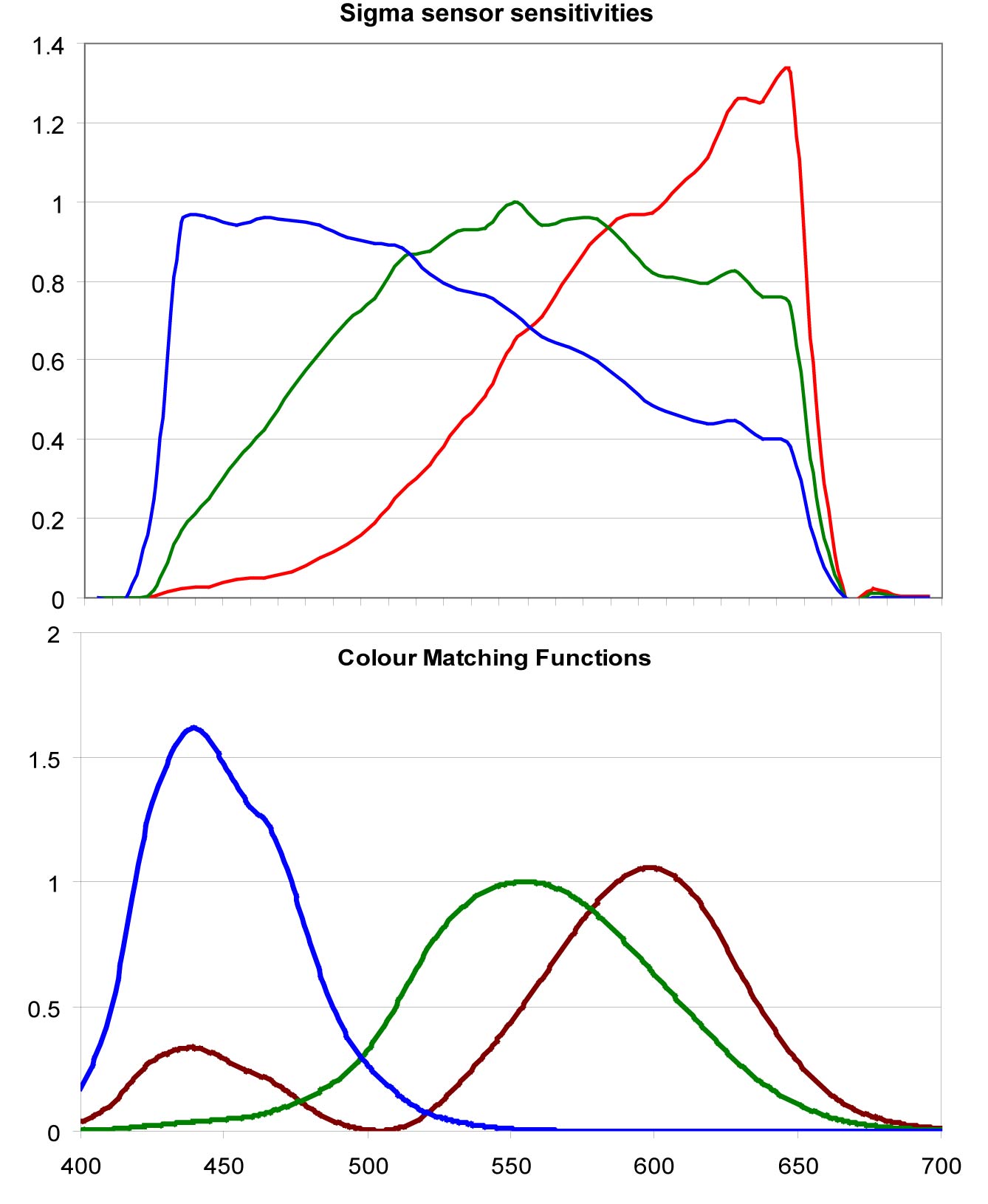

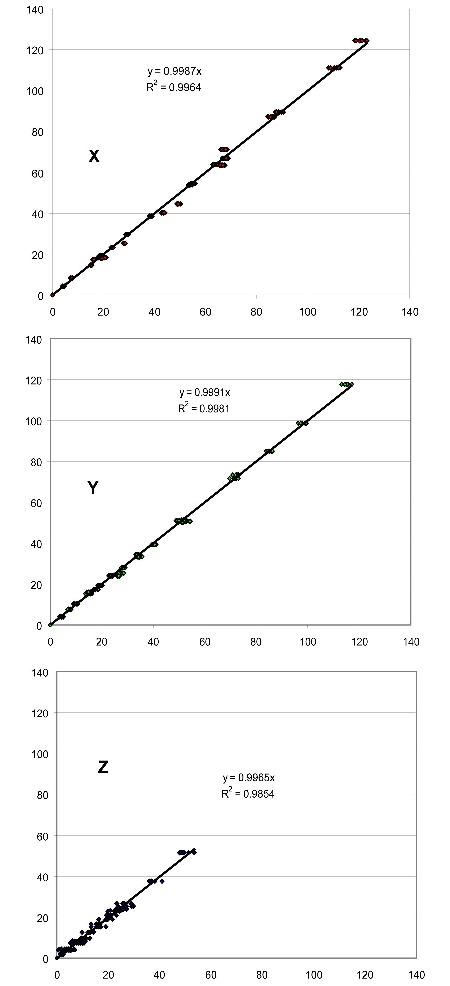

where CIEXYZ is the (31x3) matrix of CIE 1931 colour matching functions and Sensors is the (31x3) matrix of the camera's sensor sensitivity functions. Figure 3 (below) compares the XYZ values obtained with this simple matrix transformation against those measured by the spectroradiometer for all 24 Macbeth patches photographed at 10 different integration times. The results (especially for the blue sensor) are not very encouraging: as mentioned before, a simple 3x3 matrix transformation cannot capture the complexity of the problem.
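To make the matrix derivation concrete, here is a minimal MATLAB sketch (it should also run in Octave). It assumes that, for a reflected spectrum r sampled at the same 31 wavelengths, the camera responses are Sensors' * r and the tristimulus values are CIEXYZ' * r, and it takes M as the least-squares solution of M * Sensors' = CIEXYZ'. The 400-700 nm sampling and the Gaussian curves are hypothetical stand-ins, not the real CIE 1931 functions or our camera's measured sensitivities.

```matlab
% Minimal sketch: derive a 3x3 RGB-to-XYZ matrix from spectral curves alone.
% ASSUMPTION: Gaussian curves stand in for the real CIE 1931 colour matching
% functions and the camera sensitivities; replace them with the measured (31x3) data.
lambda = (400:10:700)';                          % 31 wavelength samples (assumed)
gauss  = @(mu, sd) exp(-0.5 * ((lambda - mu) / sd) .^ 2);

CIEXYZ  = [gauss(600, 40) + 0.35 * gauss(445, 20), gauss(555, 45), 1.8 * gauss(450, 25)];
Sensors = [gauss(610, 35), gauss(540, 40), gauss(460, 30)];

% For any spectrum r (31x1): RGB = Sensors' * r and XYZ = CIEXYZ' * r.
% The least-squares M makes M * Sensors' as close as possible to CIEXYZ'.
M = CIEXYZ' * pinv(Sensors');                    % 3x3

% Apply M to three hypothetical smooth test spectra and compare with the true XYZ.
r = [gauss(500, 80), gauss(620, 60), 0.2 + 0.6 * gauss(550, 100)];   % 31x3
XYZ_true = CIEXYZ' * r;                          % 3x3, one column per spectrum
XYZ_est  = M * (Sensors' * r);                   % XYZ predicted from camera RGB
relErr   = abs(XYZ_est - XYZ_true) ./ XYZ_true;  % relative error per X, Y, Z
disp(M);
disp(relErr);
```

If the camera sensitivities were an exact linear combination of the colour matching functions (the Luther condition), this M would reproduce XYZ exactly for every spectrum; the values in relErr are what remains when they are not.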

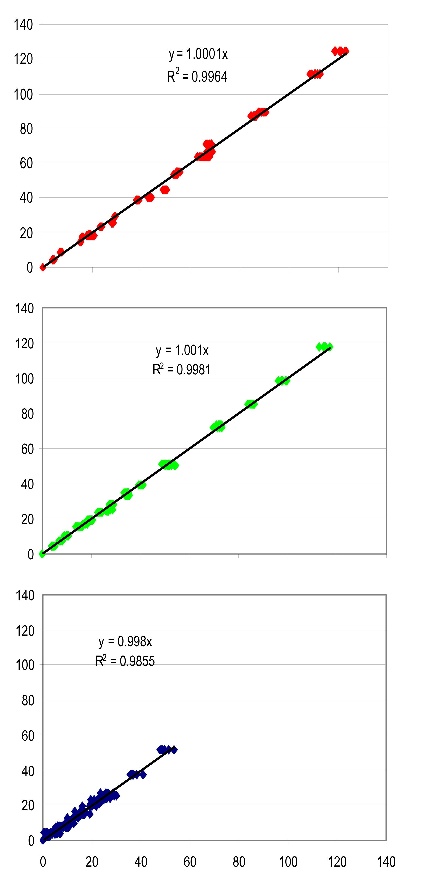

A second approach consists of adjusting the values of M so that the calculated data fit the measured data. Figure 4 shows the results of this approach.
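The second approach amounts to an ordinary least-squares regression on paired observations. In this hedged sketch, RGB holds the camera-model outputs and XYZmeas the spectroradiometer readings, one column per observation; the variable names, the synthetic values and Mtrue are hypothetical placeholders for the real data (24 Macbeth patches x 10 integration times).

```matlab
% Minimal sketch: fit M directly to paired camera / spectroradiometer data.
% ASSUMPTION: RGB (3xN) holds camera-model outputs and XYZmeas (3xN) the measured
% tristimulus values, one column per observation. Synthetic values are used here.
N = 240;                                         % e.g. 24 patches x 10 integration times
k = 1:N;
RGB = [0.5 + 0.4 * sin(0.11 * k); ...
       0.5 + 0.4 * cos(0.07 * k); ...
       0.5 + 0.4 * sin(0.05 * k + 1)];
Mtrue = [0.41 0.36 0.18; 0.21 0.72 0.07; 0.02 0.12 0.95];        % hypothetical
XYZmeas = Mtrue * RGB + 0.005 * sin(0.37 * [k; k + 3; k + 7]);   % small deterministic "noise"

% Least-squares solution of M * RGB = XYZmeas for this overdetermined system.
M = XYZmeas / RGB;

% RMS residual for each tristimulus value (cf. the X, Y and Z panels of the figures).
resid  = XYZmeas - M * RGB;
rmsXYZ = sqrt(mean(resid .^ 2, 2));
disp(M);
disp(rmsXYZ');
```

For a full-rank RGB, the `/` operator gives the same result as `XYZmeas * pinv(RGB)`; the fitted M is only as good as the range of colours and exposures in the fitting data.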

A third approach consists of replacing the 3x3 matrix with a polynomial function of the form:

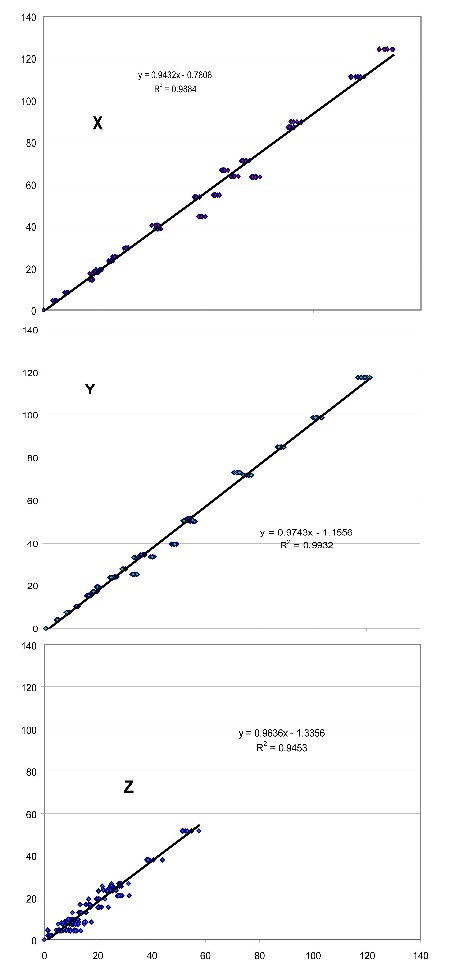

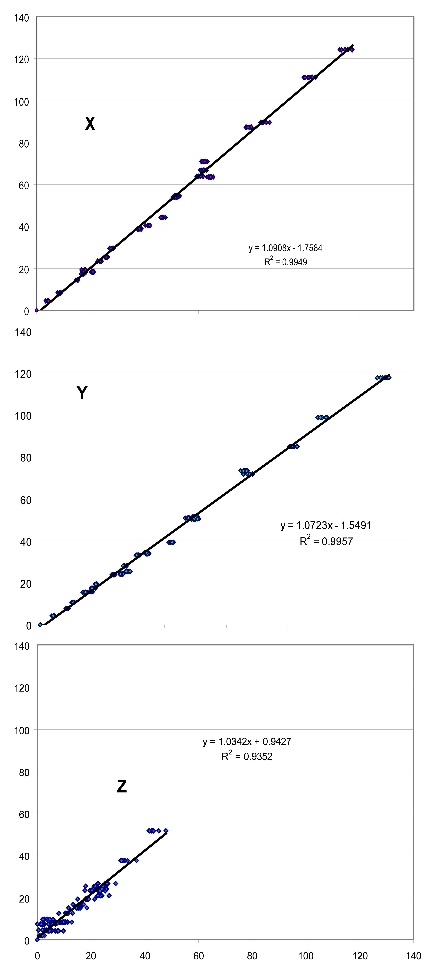

Where K is also defined in terms of aperture, focal length and integration time, and the coefficients M are determined by fitting the data to some known dataset. The advantages of defining the parameters M in terms of the type of data we want to photograph become evident in the following example.

Suppose we want to adjust our camera output so that it matches all the colours defined in the Munsell chart (their spectral reflectances are easily available). We could calculate (in theory) the RGB values that such colours would produce when illuminated by a standard light and photographed by our camera, calculate their XYZ values using the CIE 1931 colour matching functions, and then find the best set of parameters M for those values. This would provide a universal solution, fitted to all colours but containing large errors in some parts of the colour space.

Now suppose we are interested in just a subset of all the colours in the world, such as the colours most commonly found in nature. We could then repeat the procedure using a database of spectral reflectances of natural objects and natural illuminations (which are also available), and our camera's RGB-to-XYZ transformation would be optimised for those combinations of colours and illuminations.

Figure 5 (below) compares the XYZ values obtained from the Macbeth card by our camera model, after the parameters M were optimised for the whole of the Munsell book, against the measured values. Similarly, Figure 6 was obtained with the camera optimised for a database of Northern-European natural reflectances (Parkkinen et al., "Spectral representation of colour images," IEEE 9th International Conference on Pattern Recognition, Rome, Italy, 14-17 November 1988, Vol. 2, pp. 933-935).
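The database-driven procedure described above can be sketched as follows. The exact polynomial of the original model is not reproduced here, so this sketch assumes a generic second-order expansion with terms R, G, B, RG, RB, GB, R^2, G^2, B^2, sets K = 1, uses an equal-energy illuminant instead of a standard light, and replaces the Munsell or natural-object reflectances with synthetic smooth spectra. All of these choices, and the Gaussian stand-ins for the colour matching functions and sensors, are illustrative assumptions.

```matlab
% Minimal sketch: fit polynomial RGB-to-XYZ coefficients to a reflectance database.
% ASSUMPTIONS (not the original model): terms [R G B RG RB GB R^2 G^2 B^2], K = 1,
% equal-energy light, Gaussian stand-in curves, synthetic reflectances.
lambda = (400:10:700)';
gauss  = @(mu, sd) exp(-0.5 * ((lambda - mu) / sd) .^ 2);
CIEXYZ  = [gauss(600, 40) + 0.35 * gauss(445, 20), gauss(555, 45), 1.8 * gauss(450, 25)];
Sensors = [gauss(610, 35), gauss(540, 40), gauss(460, 30)];
illum   = ones(size(lambda));                    % equal-energy illuminant (assumed)

% Hypothetical "database": 200 smooth reflectances with varied peak, width, height.
nRefl = 200;
refl  = zeros(numel(lambda), nRefl);
for n = 1:nRefl
  mu  = 400 + 300 * mod(0.6180 * n, 1);
  sd  = 30 + 60 * mod(0.4142 * n, 1);
  amp = 0.3 + 0.6 * mod(0.7321 * n, 1);
  refl(:, n) = 0.05 + amp * gauss(mu, sd);
end

radiance = bsxfun(@times, refl, illum);          % light reaching the camera
RGB = Sensors' * radiance;                       % 3 x nRefl simulated camera responses
XYZ = CIEXYZ'  * radiance;                       % 3 x nRefl target tristimulus values

% Second-order polynomial expansion of each RGB column (one row per term).
polyTerms = @(c) [c(1,:); c(2,:); c(3,:); c(1,:).*c(2,:); c(1,:).*c(3,:); ...
                  c(2,:).*c(3,:); c(1,:).^2; c(2,:).^2; c(3,:).^2];

Mlin  = XYZ / RGB;                               % 3x3 linear fit, for comparison
Mpoly = XYZ / polyTerms(RGB);                    % 3x9 polynomial coefficients

rmsOf   = @(E) sqrt(mean(E(:) .^ 2));
errLin  = rmsOf(XYZ - Mlin  * RGB);
errPoly = rmsOf(XYZ - Mpoly * polyTerms(RGB));
fprintf('RMS error  linear: %.4g   polynomial: %.4g\n', errLin, errPoly);
```

Because the polynomial includes the linear terms, its error on the training database cannot exceed that of the 3x3 fit. How well it generalises to other colours depends on how representative the training reflectances are, which is the trade-off between the Munsell and natural-reflectance fits described above.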

The Matlab functions used to convert from camera space to CIE XYZ space are here.

Figure 3. Comparison of the XYZ values obtained by using the simple matrix transformation (displayed on the abscissas) against those measured by the spectroradiometer (displayed on the ordinates) for all 24 Macbeth patches photographed at 10 different integration times. Plots correspond to each of the X, Y and Z tristimulus values.

Figure 4. Comparison of the XYZ values obtained by using the adjusted polynomial transformation (displayed on the abscissas) against those measured by the spectroradiometer (displayed on the ordinates) for all 24 Macbeth patches photographed at 10 different integration times. The polynomial parameters were adjusted to optimally fit the Macbeth chart data. Plots correspond to each of the X, Y and Z tristimulus values.

Figure 5. Comparison of the XYZ values obtained by using the polynomial transformation (displayed on the abscissas) against those measured by the spectroradiometer (displayed on the ordinates) for all 24 Macbeth patches photographed at 10 different integration times. The polynomial parameters were adjusted to optimally match the Munsell book data. Plots correspond to each of the X, Y and Z tristimulus values.

Figure 6. Comparison of the XYZ values obtained by using the polynomial transformation (displayed on the abscissas) against those measured by the spectroradiometer (displayed on the ordinates) for all 24 Macbeth patches photographed at 10 different integration times. The polynomial parameters were adjusted to optimally match a database of natural object reflectances. Plots correspond to each of the X, Y and Z tristimulus values.
