A grey ball to estimate the chromaticity of the illuminant

For the grey ball to fulfil its purpose it should have certain characteristics:

a) It has to be painted a relatively dark colour (so that its image does not saturate under sunlight)

b) It has to reflect light in a spectrally uniform manner within the spectral sensitivity range of the camera's sensor (420-670 nm)

c) It should not produce strong specularities, even under sunlight (a surface that reflects light in this way is called "diffuse" or "Lambertian")

d) Its colour should last for at least one photographic session

e) It should be relatively easy to replace once its colour has faded (i.e. cheap to make)
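Conditions a) and c) can be checked on a captured frame. The following is a minimal Python sketch, assuming the ball has already been cropped from an uncalibrated 8-bit JPG into an (H, W, 3) NumPy array. The function name `saturation_fraction` is hypothetical and is not part of the dataset. It reports the fraction of ball pixels in which any channel is clipped at 255, which would flag either overexposure or strong specular highlights.

```python
import numpy as np


def saturation_fraction(region: np.ndarray, max_value: int = 255) -> float:
    """Fraction of pixels in an 8-bit RGB region with at least one clipped channel.

    `region` is assumed (hypothetically) to be an (H, W, 3) uint8 crop of the grey ball.
    """
    if region.ndim != 3 or region.shape[2] != 3:
        raise ValueError("expected an (H, W, 3) array")
    # True wherever any of the three channels has reached the sensor ceiling.
    clipped = (region >= max_value).any(axis=2)
    return float(clipped.mean())


# Synthetic example: a 10x10 mid-grey patch containing a 2x2 specular highlight.
patch = np.full((10, 10, 3), 90, dtype=np.uint8)
patch[4:6, 4:6] = 255
print(saturation_fraction(patch))  # 4 of 100 pixels are clipped -> 0.04
```

A non-zero result on a well-exposed frame would suggest the paint is too light or too glossy for the lighting conditions.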

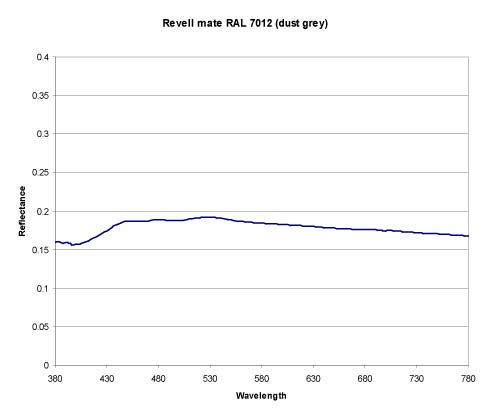

Taking into account all these conditions and constraints, we built a grey ball from a plastic sphere 35 mm in diameter and fixed it at the end of a supporting rod, about 475 mm away from the camera. The ball was painted with several thin coats of Revell matt dust grey (RAL 7012), giving it an albedo of 0.18 (see spectral reflectance below). Click here to download the values in Excel format.
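Because the ball reflects light roughly uniformly across the sensor's range (condition b), the mean colour of its pixels is proportional to the colour of the illuminant. The illuminant's CIE 1931 chromaticity then follows from the standard ratios x = X/(X+Y+Z) and y = Y/(X+Y+Z), and the 0.18 albedo cancels out. A minimal sketch, assuming you have already extracted the XYZ values of the ball region from a calibrated image. The function name is hypothetical, and in practice you may want to exclude shadowed or specular pixels before averaging.

```python
import numpy as np


def illuminant_chromaticity(xyz_pixels: np.ndarray) -> tuple[float, float]:
    """Estimate the illuminant's CIE 1931 (x, y) chromaticity from grey-ball pixels.

    xyz_pixels: array whose last axis holds X, Y, Z values sampled from the ball.
    Assumes the ball is spectrally flat, so its mean XYZ is the illuminant's XYZ
    scaled by the albedo; that scale factor cancels in the chromaticity ratios.
    """
    X, Y, Z = np.asarray(xyz_pixels, dtype=float).reshape(-1, 3).mean(axis=0)
    total = X + Y + Z
    if total <= 0:
        raise ValueError("grey-ball pixels must have positive X + Y + Z")
    return float(X / total), float(Y / total)


# Synthetic check: a ball of albedo 0.18 lit by a D65-like white (X, Y, Z).
d65_white = np.array([95.047, 100.0, 108.883])
ball_pixels = np.tile(0.18 * d65_white, (50, 1))
x, y = illuminant_chromaticity(ball_pixels)
print(f"x = {x:.4f}, y = {y:.4f}")  # x = 0.3127, y = 0.3290
```

Averaging XYZ before taking ratios, rather than averaging per-pixel chromaticities, weights brighter (better-exposed) pixels more heavily.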

The Dataset

A table linking to the actual database is shown below. Each image can be inspected by clicking on the figures at the end of this page. Individual images are for inspection purposes only (none of the .jpg files are calibrated!). To obtain the calibrated imagery, download the large .zip files from the table and decompress them. Each archive contains two directories: one with uncalibrated pictures (called pics) and another with MATLAB (.mat) files containing the individual calibrated images.
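The calibrated .mat files can also be read outside MATLAB. A minimal Python sketch using SciPy's `scipy.io.loadmat`, assuming the files were saved in a MATLAB format older than v7.3 (v7.3 files are HDF5 and would need `h5py` instead). The variable names stored in each file are not documented on this page, so the hypothetical helper `load_calibrated_images` simply returns every user variable so that its name and shape can be inspected.

```python
import sys
from pathlib import Path

import numpy as np
from scipy.io import loadmat


def load_calibrated_images(mat_path: str) -> dict[str, np.ndarray]:
    """Return every array variable stored in a MATLAB .mat file.

    Keys beginning with '__' (header, version, globals) are loadmat metadata
    rather than data, so they are skipped.
    """
    contents = loadmat(mat_path)
    return {
        name: value
        for name, value in contents.items()
        if not name.startswith("__") and isinstance(value, np.ndarray)
    }


if __name__ == "__main__":
    # Usage: python load_mat.py file1.mat [file2.mat ...]
    for path in sys.argv[1:]:
        for name, array in load_calibrated_images(path).items():
            print(f"{Path(path).name}: {name} shape={array.shape} dtype={array.dtype}")
```

Printing shapes first is a cheap way to discover how each image is stored before writing any further processing.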

| Image set | CIE 1931 XYZ | Stockman & Sharpe (2000) LMS | Smith & Pokorny (1975) LMS | Uncalibrated JPG |
|---|---|---|---|---|
| Urban scenery | CIE1931XYZ | Stockman & Sharpe (2000) LMS | Smith & Pokorny (1975) LMS | Uncalibrated JPG |
| Forest / Motorways | CIE1931XYZ | Stockman & Sharpe (2000) LMS | Smith & Pokorny (1975) LMS | Uncalibrated JPG |
| Snow & Seaside | CIE1931XYZ | Stockman & Sharpe (2000) LMS | Smith & Pokorny (1975) LMS | Uncalibrated JPG |
| Natural objects 01 | CIE1931XYZ | Stockman & Sharpe (2000) LMS | Smith & Pokorny (1975) LMS | Uncalibrated JPG |
| Natural objects 02 | CIE1931XYZ | Stockman & Sharpe (2000) LMS | Smith & Pokorny (1975) LMS | Uncalibrated JPG |
| Natural objects 03 | CIE1931XYZ | Stockman & Sharpe (2000) LMS | Smith & Pokorny (1975) LMS | Uncalibrated JPG |
| Natural objects 04 | CIE1931XYZ | Stockman & Sharpe (2000) LMS | Smith & Pokorny (1975) LMS | Uncalibrated JPG |

Information about the original headers of all pictures (in MATLAB .mat format) can be downloaded from here.

References (please cite the most appropriate reference when using these images):

Parraga, C. A., Baldrich, R., & Vanrell, M. (2010). Accurate Mapping of Natural Scenes Radiance to Cone Activation Space: A New Image Dataset. In CGIV 2010/MCS'10 - 5th European Conference on Colour in Graphics, Imaging, and Vision - 12th International Symposium on Multispectral Colour Science. Society for Imaging Science and Technology.

Parraga, C. A., Vazquez-Corral, J., & Vanrell, M. (2009). A new cone activation-based natural images dataset. Perception, 36(Suppl), 180.